In 2011 a small study used eye-tracking to observe how people watched a scene from There Will Be Blood. The experiment produced a real-time overlay showing how audiences' eyes respond to filmmaking, lighting, and performance. The results were generally what you would expect: people's eyes tend to dart to light sources, then to faces, and then, once they've taken in the scene, to small movements, props, or other details of the frame.

But what does a computer see when it’s programmed to watch a movie?

This is the question that artist Ben Grosser asked, and sought to answer, with his art experiment "Computers Watching Movies." After writing a custom algorithm, Grosser began running famous film sequences through it. The program spits out a visual representation of where its attention is fixed within the frame at any given moment. As the artist puts it:

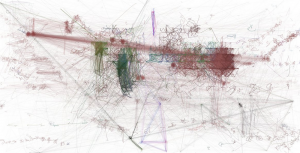

Computers Watching Movies shows what a computational system sees when it watches the same films that we do. The work illustrates this vision as a series of temporal sketches, where the sketching process is presented in synchronized time with the audio from the original clip. Viewers are provoked to ask how computer vision differs from their own human vision, and what that difference reveals about our culturally-developed ways of looking. Why do we watch what we watch when we watch it? Will a system without our sense of narrative or historical patterns of vision watch the same things?

Obviously, this program responds in ways dictated by the code Grosser wrote, but it opens up an interesting conversation in a world where facial recognition and other visual-response technologies are becoming ubiquitous. Keep in mind that this is art more than scientific research, so it's a conversation starter rather than a final word.

So, for an appropriate example, here’s the scene from The Matrix in which Neo is first told about Agents, after his walk down the sidewalk with Morpheus…

In this example you can immediately see a figure traced in the center before lateral motion mostly takes over. It's already clear that the program reacts to far more discrete motion and change than a human viewer does, resulting in a more scattered, yet more complete, recognition of objects and profiles.

For a good example of something different, take this clip from Inception, in which Cobb explains the dream-sharing mechanism before the dream breaks down in the famous series of fractal explosions. Here the program tracks so much acute motion that the sketch becomes a semi-opaque haze over the entire frame.

After seeing some of these videos, I was curious about Grosser's thought process behind the visual representation. There are many ways this could be presented: the sketches overlaid atop a semi-transparent video, or the sketches placed next to the original video, for example. You could also have the sketch fade away steadily, so viewers could observe the computer's "instincts" for watching more closely in real time. So how did Grosser settle on these "temporal sketches"?

When developing the work, I tried various configurations, including showing the “drawings” on top of the original video, as well as showing the drawings in sync with the original but on separate screens (I tried it so you could see both screens at the same time and also so you had to turn around to see the original and thus couldn’t see them at the same time). I found two things with all configurations that show the original video: 1) people pay more attention to the movie itself than the drawings (they can’t help but get sucked in), and 2) they (and I) lose an important interaction produced by the work. That interaction is what happens when you watch the computer sketch its vision of say, The Matrix, while you scan your memory and try to correlate what the computer sees with what you remember of the scene. This is one of the reasons why I chose well known scenes from well known movies—I want the viewer to have something they might remember well enough that this interaction can occur. In this way the work is more art and less visualization or info-based film analysis.

As interested as I am in watching this in different ways, I agree that this is ultimately the most interesting presentation. The contrast between the program's response to Nolan's filmmaking and its response to, say, Kubrick's is already striking. That led me to wonder what we could discover about directors' and cinematographers' work if entire films were fed through and the results aggregated across whole filmographies. These are certainly possibilities for the future…

I agree about what might emerge with more runs of the software. Would Scorsese's output look consistent across his films? How would it compare with Woody Allen or Kubrick? I'd also like to look across a single film: how similar is it from start to end, for example? There hasn't been much exploration of it out in the world yet. I've only just started sharing it.

Grosser's mind is an interesting one, and I highly recommend taking a look through his portfolio of installations and artistic experiments. Most interesting to me is an installation that trained an array of cameras on a couch: viewers sat on it and watched footage of themselves processed through reality-show editing. And if you're interested in more of "Computers Watching Movies," all of Grosser's uploads so far are included below. You can also watch the 15-minute "Exhibition Cut," which collects several examples and has screened at some festivals and conferences.

Taxi Driver